If you write JavaScript, you felt it: a wave of alerts, frantic greps through lockfiles, and a long evening of incident response. On September 8, a maintainer’s npm account was phished, and attackers briefly pushed malicious updates to 18 popular packages, including long-standing staples like chalk and debug. The payload primarily targeted browser contexts—hooking APIs and wallet interfaces to silently redirect crypto transactions—but the blast radius extended to any build or app that consumed those poisoned versions during the short window they were live.

I want to offer three things here:

- a crisp, accurate summary of the attack (without sensationalism),

- a pragmatic playbook you can act on immediately, and

- a broader perspective that supports maintainers while hardening our pipelines.

First, what actually happened?

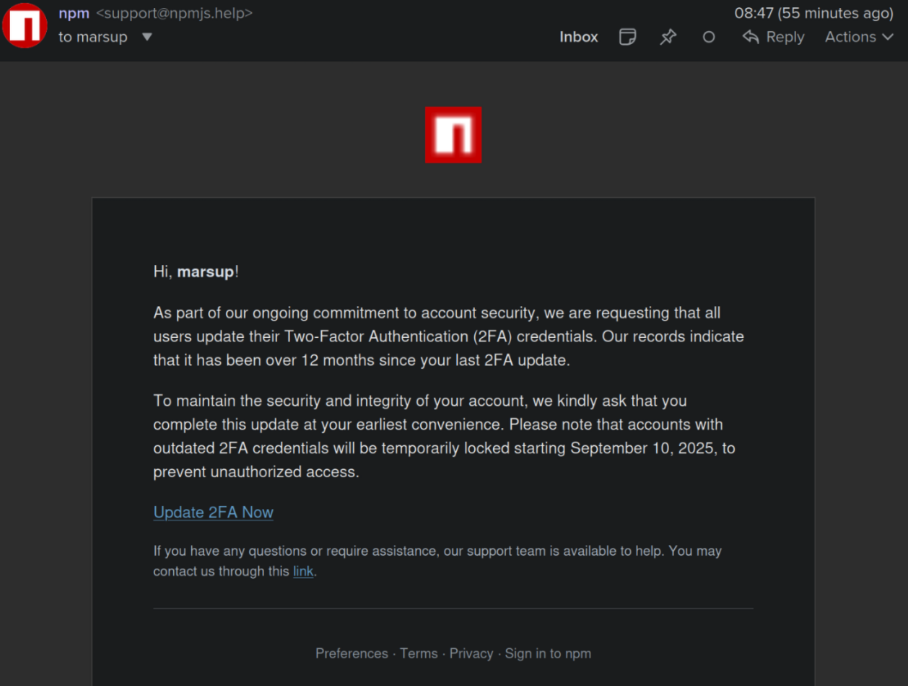

The attack chain: An open-source maintainer—well known for utilities many of us transitively rely on—was tricked by a phishing email that spoofed npm support, using the domain npmjs.help. The lure asked them to update 2FA; when they followed the link and supplied credentials plus a TOTP code, attackers took over the account and published malicious versions to widely installed packages. Screenshots, timing, and additional IOCs (including references to infrastructure used by the phish) were shared publicly and corroborated by multiple security writeups.

Impact scope: For a short window on September 8 (various reports put it around late morning to early afternoon US Eastern time), malware was live on chalk, debug, and a constellation of utility packages they depend on. Collectively, these packages see roughly 2–2.6 billion weekly downloads—which sounds apocalyptic until you remember that most production builds are pinned or CI-reproducible and that the community moved quickly to pull or deprecate the bad versions. Still, if you ran fresh installs during that window or auto-bumped, you should investigate.

What the malware did: The added code sat in the packages’ entry points (e.g., index.js) and hooked browser-side primitives like fetch, XMLHttpRequest, and window.ethereum. In sites that ended up serving code built with the compromised packages, it could intercept wallet interactions and rewrite payment destinations (ETH/SOL/BTC were referenced) to attacker addresses. This is a front-end threat path: your server won’t run the stealer, but your users’ browsers might if you shipped a build that included the poisoned versions.

Confirmed compromised versions: independent researchers compiled and verified a list. If your lockfiles contain any of the versions below, treat them as malicious:

debug@4.4.2

chalk@5.6.1

ansi-styles@6.2.2

ansi-regex@6.2.1

supports-color@10.2.1

strip-ansi@7.1.1

wrap-ansi@9.0.1

slice-ansi@7.1.1

chalk-template@1.1.1

supports-hyperlinks@4.1.1

has-ansi@6.0.1

is-arrayish@0.3.3

error-ex@1.3.3

simple-swizzle@0.2.3

color@5.0.1

color-convert@3.1.1

color-string@2.1.1

color-name@2.0.1

backslash@0.2.1

Several credible sources published this exact set, along with version-pinned IoCs you can search for in your repos and artifacts.
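If you would rather search structurally than by text, here is a minimal sketch that walks the packages map of an npm lockfile (lockfileVersion 2 or 3) and reports exact name/version matches. The function name findCompromised is my own, and the sketch assumes the usual "node_modules/<name>" key layout; it does not read pnpm or Yarn lockfiles.

```javascript
// Name -> compromised version, taken from this incident's published list.
const COMPROMISED = new Map([
  ["debug", "4.4.2"], ["chalk", "5.6.1"], ["ansi-styles", "6.2.2"],
  ["ansi-regex", "6.2.1"], ["supports-color", "10.2.1"], ["strip-ansi", "7.1.1"],
  ["wrap-ansi", "9.0.1"], ["slice-ansi", "7.1.1"], ["chalk-template", "1.1.1"],
  ["supports-hyperlinks", "4.1.1"], ["has-ansi", "6.0.1"], ["is-arrayish", "0.3.3"],
  ["error-ex", "1.3.3"], ["simple-swizzle", "0.2.3"], ["color", "5.0.1"],
  ["color-convert", "3.1.1"], ["color-string", "2.1.1"], ["color-name", "2.0.1"],
  ["backslash", "0.2.1"],
]);

// Hypothetical helper: takes a parsed package-lock.json and returns
// [{ path, name, version }] for every entry that matches a bad pair.
function findCompromised(lockfile) {
  const hits = [];
  for (const [path, entry] of Object.entries(lockfile.packages ?? {})) {
    // Keys look like "node_modules/a/node_modules/chalk"; "" is the root project.
    const name = entry.name ?? path.split("node_modules/").pop();
    const bad = COMPROMISED.get(name);
    if (bad !== undefined && entry.version === bad) {
      hits.push({ path, name, version: entry.version });
    }
  }
  return hits;
}

// Usage (Node): findCompromised(JSON.parse(fs.readFileSync("package-lock.json", "utf8")))
```

Because it compares whole names, supports-color at 5.0.1 is not confused with color at 5.0.1, which is an easy mistake to make with a loose text search.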

Who is actually at risk?

This point matters because it shapes the response you should take today:

- Web apps that shipped a build created during the exposure window and that incorporated any of the listed versions could have served malicious front-end code to users. Your risk is higher if your pipeline runs npm install (floating) rather than npm ci (lockfile enforced) during CI.

- Developers running local installs during the window likely executed the package code during build/test (Node). The stealer’s target is the browser, but local environments and CI still warrant telemetry/log review and credential rotation if you pulled in the bad versions.

- Consumers of server-only code are less exposed to the crypto-drainer behavior—but if you publish libraries or ship isomorphic code, you still need to audit and roll back as appropriate.

Bottom line: check your installs (lockfiles and artifact provenance) and take proportionate action. Panic helps no one; focused validation does.
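To make the "floating" risk concrete, here is a small sketch of caret-range matching, which is how a manifest entry like ^5.3.0 ends up admitting a freshly published 5.6.1. The ranges in the example calls are hypothetical, the helper names are mine, and prerelease tags are ignored; real tooling should use the semver package rather than this simplification.

```javascript
// Parse "1.2.3" into [1, 2, 3]; prerelease and build tags are out of scope here.
function parseVersion(v) {
  return v.split(".").map(Number);
}

// Three-way comparison of two parsed versions.
function compareVersions(a, b) {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] < b[i] ? -1 : 1;
  }
  return 0;
}

// Does a caret range such as "^5.3.0" admit the given version?
function caretAllows(range, version) {
  const min = parseVersion(range.slice(1));
  const v = parseVersion(version);
  // Caret ranges keep the leftmost non-zero component fixed.
  const upper = min[0] > 0 ? [min[0] + 1, 0, 0]
    : min[1] > 0 ? [0, min[1] + 1, 0]
    : [0, 0, min[2] + 1];
  return compareVersions(v, min) >= 0 && compareVersions(v, upper) < 0;
}

console.log(caretAllows("^5.3.0", "5.6.1")); // true
console.log(caretAllows("^4.3.4", "4.4.2")); // true
console.log(caretAllows("^5.3.0", "6.0.0")); // false
```

A lockfile-enforced install never consults the range at all, which is why it would have kept resolving to whatever version was recorded before the incident.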

How to quickly assess your exposure

1) Freeze new installs. In CI, switch to npm ci if you’re not already using it. This enforces your lockfile exactly and prevents surprise bumps.

2) Search your lockfiles for the packages and versions above:

# npm, Yarn classic, and pnpm lockfiles: match exact name/version pairs as they
# appear in tarball URLs (chalk-5.6.1.tgz) and pnpm keys (chalk@5.6.1)
grep -nE '(^|[^[:alnum:]-])(debug[@/-]4\.4\.2|chalk[@/-]5\.6\.1|ansi-styles[@/-]6\.2\.2|ansi-regex[@/-]6\.2\.1|supports-color[@/-]10\.2\.1|strip-ansi[@/-]7\.1\.1|wrap-ansi[@/-]9\.0\.1|slice-ansi[@/-]7\.1\.1|chalk-template[@/-]1\.1\.1|supports-hyperlinks[@/-]4\.1\.1|has-ansi[@/-]6\.0\.1|is-arrayish[@/-]0\.3\.3|error-ex[@/-]1\.3\.3|simple-swizzle[@/-]0\.2\.3|color[@/-]5\.0\.1|color-convert[@/-]3\.1\.1|color-string[@/-]2\.1\.1|color-name[@/-]2\.0\.1|backslash[@/-]0\.2\.1)([^0-9]|$)' \
  package-lock.json yarn.lock pnpm-lock.yaml 2>/dev/null

# yarn classic: yarn why accepts one package per call, so loop over the names
for pkg in chalk debug strip-ansi ansi-styles ansi-regex supports-color wrap-ansi slice-ansi color color-convert color-string color-name chalk-template supports-hyperlinks has-ansi is-arrayish error-ex simple-swizzle backslash; do yarn why "$pkg"; done

3) Use a community rule to flag the IoCs. Semgrep published a free, MIT-licensed rule aligned to the package+version list. If you already have Semgrep set up, this is the fastest path to a repo-wide check:

semgrep --config r/kxUgZJg/semgrep.ssc-mal-deps-mit-2025-09-chalk-debug-color

(That rule targets the versions explicitly; you can also extend it to grep built assets for tell-tale obfuscated snippets.)

4) Inspect built web assets if you suspect a contaminated build was deployed. Look for new, obfuscated code chunks referencing wallet APIs, fetch, or XMLHttpRequest hooks. Your source might look clean now if you’ve reverted—so keep artifacts or consult your artifact repo to diff the exact bundles that went live.
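For step 4, a rough first pass over a built bundle can be scripted. The patterns below are my own illustrative heuristics, not the published IoCs: they flag assignments to fetch, patches to XMLHttpRequest.prototype, references to window.ethereum, and the _0x-style identifiers that common JavaScript obfuscators tend to emit. Legitimate wallet-enabled apps will trip some of these, so treat hits as a prompt to diff the bundle, not as a verdict.

```javascript
// Hypothetical heuristics: each entry pairs a human-readable label with a pattern.
const HEURISTICS = [
  ["reassigns fetch", /\b(?:window|globalThis|self)\.fetch\s*=[^=]/],
  ["patches XMLHttpRequest prototype", /XMLHttpRequest\.prototype\.(?:open|send)\s*=[^=]/],
  ["touches window.ethereum", /\bwindow\.ethereum\b/],
  ["hex-style obfuscated identifiers", /\b_0x[0-9a-f]{4,}\b/i],
];

// Returns the labels of every heuristic that matches the bundle source text.
function scanBundle(source) {
  return HEURISTICS.filter(([, pattern]) => pattern.test(source)).map(([label]) => label);
}

const sample = "window.fetch = function (u) { return orig(u); }; if (window.ethereum) {}";
console.log(scanBundle(sample)); // [ 'reassigns fetch', 'touches window.ethereum' ]
```

Running the same scan over the last known-good bundle gives you a baseline, so only the labels that newly appear need a closer look.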

If you find a match: a measured incident response

Stop the bleeding.

- Temporarily block fresh installs (freeze deploys, favor npm ci, pause automatic dependency bumps).

- In a monorepo, quarantine affected packages and prioritize apps that actually ship to browsers.

Roll back and pin.

- Revert deploys built with compromised versions.

- Pin to the last known-good versions using overrides in your top-level package.json to force safe transitive versions across the tree:

{
  "overrides": {
    "chalk": "5.6.0",
    "debug": "4.4.1",
    "ansi-styles": "6.2.1",
    "ansi-regex": "6.2.0",
    "supports-color": "10.2.0",
    "strip-ansi": "7.1.0",
    "wrap-ansi": "9.0.0",
    "slice-ansi": "7.1.0",
    "chalk-template": "1.1.0",
    "supports-hyperlinks": "4.1.0",
    "has-ansi": "5.0.1",
    "is-arrayish": "0.3.2",
    "error-ex": "1.3.2",
    "simple-swizzle": "0.2.2",
    "color": "4.2.3",
    "color-convert": "3.1.0",
    "color-string": "2.1.0",
    "color-name": "1.1.4",
    "backslash": "0.2.0"
  }
}

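Reinstall from scratch:

rm -rf node_modules package-lock.json
npm install # regenerates the lockfile with the overrides applied; review the diff, then commit it
npm ci # from then on, CI installs exactly what the committed lockfile records

(npm ci refuses to run without a lockfile, so the regeneration step has to be npm install. The overrides field is part of npm and lets you force transitive dependency versions; it’s documented in npm’s package.json reference. pnpm and Yarn have comparable fields, pnpm.overrides and resolutions, and their lockfile-enforced installs are pnpm install --frozen-lockfile and yarn install --frozen-lockfile. Deleting the lockfile lets every other dependency re-resolve too, which is why the diff deserves a review. Consider removing overrides later, once the ecosystem stabilizes.)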

Rotate secrets—judiciously.

- If a web build with the malware was served to users, treat any client-side secrets (API keys in front-end contexts, wallet session data) as potentially exposed and rotate accordingly.

- If your CI or developer machines installed the compromised versions, rotate npm tokens, GitHub tokens, SSH keys, and anything resident in env files or credential stores that your build tools touch. (The Nx incident two weeks ago showed how a malicious postinstall script can ruthlessly exfiltrate developer secrets; see context below.)

Communicate with empathy.

- Update your status page/changelog succinctly.

- Avoid blame; acknowledge the root cause (phishing at the maintainer level), explain mitigations, and commit to concrete improvements.

Why this keeps happening—and why blaming maintainers won’t fix it

It’s natural to ask “how could this happen?” The reality is that well-crafted phishing beats TOTP 2FA far too often. If you enter a one-time code on a fake site, attackers can replay it. Stronger, phishing-resistant 2FA (hardware-key/WebAuthn) helps, but the real pivot is architectural: stop relying on long-lived tokens and human logins to publish in the first place.
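Why does WebAuthn hold up where TOTP does not? The browser, not the user, records which origin asked for the signature, and the server can reject anything that came from the wrong one. The sketch below shows only that origin and challenge check on the clientDataJSON payload; the function name and the expected values are illustrative, and a real verifier must also validate the authenticator data and the signature.

```javascript
// Hypothetical server-side check of the clientDataJSON string that accompanies
// a WebAuthn login assertion. Returns null when the fields are acceptable,
// otherwise a short reason for rejection.
function checkClientData(clientDataJSON, expected) {
  const data = JSON.parse(clientDataJSON);
  if (data.type !== "webauthn.get") return "wrong ceremony type";
  if (data.challenge !== expected.challenge) return "challenge mismatch";
  // The browser fills in the origin itself, so a look-alike domain cannot fake it.
  if (data.origin !== expected.origin) return "origin mismatch";
  return null;
}

// Illustrative values: the challenge is whatever the server issued for this login.
const expected = { challenge: "abc123", origin: "https://www.npmjs.com" };
const fromPhish = JSON.stringify({
  type: "webauthn.get",
  challenge: "abc123",
  origin: "https://npmjs.help",
});
console.log(checkClientData(fromPhish, expected)); // origin mismatch
```

A TOTP code carries no such binding: the six digits are equally valid whichever site the user typed them into, which is exactly what a relay phish exploits.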

Trusted Publishing + Provenance: the right defaults

npm Trusted Publishing (generally available) lets you publish from CI using OIDC, removing long-lived npm tokens from the equation and automatically generating provenance attestations for public packages. With provenance, consumers can verify where and how a package was built. Adopt it if you maintain packages; require it (or at least prefer it) in your dependency policy if you’re an enterprise consumer.
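A dependency policy needs some way to tell which versions carry provenance at all. As a rough sketch, the helper below inspects a version's registry metadata for an attestations entry. The field names (dist.attestations with url and provenance) are my understanding of what the public registry returns for versions published with provenance, so treat them as an assumption and confirm against a real response. Presence of the field is only a hint; npm audit signatures is what performs the actual verification.

```javascript
// Hypothetical helper: takes the metadata object for one published version
// (the JSON the registry serves for a specific name and version) and reports
// whether it advertises a provenance attestation.
// Assumed shape: { dist: { attestations: { url, provenance: { predicateType } } } }
function advertisesProvenance(versionMeta) {
  const attestations = versionMeta?.dist?.attestations;
  return Boolean(attestations && attestations.url && attestations.provenance);
}

// Hypothetical policy check: names of dependencies that advertise no provenance.
function withoutProvenance(metas) {
  return metas.filter((meta) => !advertisesProvenance(meta)).map((meta) => meta.name);
}
```

In a policy job you would feed this the metadata for each locked dependency and report, rather than block, at first; many widely used packages are still published manually.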

A telling case study: the Nx team’s post-mortem from late August. Attackers exploited a GitHub Actions injection to steal a classic npm token and publish malicious nx builds for ~4 hours. In response, Nx moved to Trusted Publishing and added manual approvals for releases—exactly the direction the ecosystem needs.

A practical hardening checklist (you can start this week)

For maintainers (publishers):

- Turn on Trusted Publishing for your packages; let CI authenticate via OIDC. (npm docs link out to GitHub/GitLab integration steps.)

- Require WebAuthn (security-key) 2FA for any interactive account actions; prefer automation over human logins for publish.

- Sign and expose provenance; teach consumers how to verify it (npm audit signatures, or sigstore tooling like cosign against bundles).

- Review release workflows: remove workflow_dispatch where not needed; set GitHub Actions permissions to read-only by default; avoid pull_request_target unless you truly need it. (Nx’s timeline shows how small defaults compound.)

For application teams (consumers):

- Reproducible installs in CI: use npm ci / pnpm install --frozen-lockfile / yarn install --frozen-lockfile to avoid surprise transitive bumps.

- Guardrails on dependency drift: gate merges on lockfile diffs; alert on sudden jumps in trusted “core” utilities like chalk/debug.

- Block obvious malware patterns in bundles: lint for obfuscated evals in first-party code; scan for suspicious additions to index.js of transitive packages (Semgrep showed how subtle but grep-able this can be).

- Prefer packages with provenance (policy + tooling). Favor projects that publish from CI with attestations; de-prefer manual local publishes.

- Limit token scope and lifetime in CI. Even if something sneaks through, a short-lived, least-privileged token curtails blast radius.
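The lockfile-diff guardrail from the list above can start very small. This sketch compares two parsed npm lockfiles (lockfileVersion 2 or 3) and reports version changes for a watchlist of core utilities. The watchlist and function names are hypothetical; in practice you would load the two lockfiles from the base and head commits of a pull request.

```javascript
// Hypothetical watchlist of "core" utilities whose version jumps deserve a look.
const WATCHED = new Set(["chalk", "debug", "ansi-styles", "strip-ansi"]);

// Map each install path in a parsed lockfile to its resolved version.
function versionsByPath(lockfile) {
  const versions = new Map();
  for (const [path, entry] of Object.entries(lockfile.packages ?? {})) {
    if (path && entry.version) versions.set(path, entry.version); // skip root and links
  }
  return versions;
}

// Report watched packages whose version is new or different in the "after" lockfile.
function watchedChanges(before, after, watched = WATCHED) {
  const previous = versionsByPath(before);
  const changes = [];
  for (const [path, version] of versionsByPath(after)) {
    const name = path.split("node_modules/").pop();
    if (watched.has(name) && previous.get(path) !== version) {
      changes.push({ path, from: previous.get(path) ?? null, to: version });
    }
  }
  return changes;
}
```

A CI job could fail, or simply request an extra reviewer, whenever watchedChanges returns a non-empty list on a pull request that was not meant to touch dependencies.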

For security and platform teams:

- Maintain a central allowlist/proxy for registries so you can quickly pin or block versions across orgs.

- Set up fleet-wide SBOM + diff so you can ask “Which build pulled <package>@<version>?” without grepping logs for days.

- Run scheduled dependency health checks (e.g., daily Semgrep/SCA run with the current IoCs during active incidents).

“But we pinned!”—why some strategies don’t guarantee safety

Pinning is often presented as a cure-all. It helps, but research shows pinning direct dependencies in npm can paradoxically increase exposure in large graphs, due to how resolution and transitive updates interact. It’s still worth pinning during an incident, but the ecosystem needs provenance, reproducible builds, and publish-time controls more than it needs blanket pinning.

Think of pinning as a seatbelt: essential, but you still need brakes, airbags, and lane assist too.

Context: the Nx compromise from late August

If this week felt familiar, it’s because the ecosystem is still digesting August’s Nx incident. There, attackers briefly pushed malicious nx versions via a stolen publishing token. The malware executed on install, scraped developer environments (SSH keys, npm/GitHub tokens, env files), and even abused AI CLI tools to help with reconnaissance and exfiltration—then stashed loot in a public repo on the victim’s own GitHub account. That made triage messy but also provided a forensic trail. Nx’s writeup is exemplary in transparency and remediation detail.

Why mention Nx in a post about chalk/debug? Because both incidents underline the same lesson: people are fallible; defaults must be safer. Tokens leak; phishing beats TOTP; manual publishes are fragile. Trusted Publishing, provenance, and least-privilege CI are how we shrink the blast radius next time.

Support the humans behind your stack

There’s an unhelpful reflex after every OSS incident: “the maintainer was careless.” It’s inaccurate and counterproductive. This attack was professional social engineering with a convincing domain and time-boxed urgency language. The maintainer responded quickly, packages were pulled fast, and the community collaborated on detection rules and guidance within hours. The right response now is support + hardening, not pile-ons.

Concretely:

- If your company depends on these libraries, sponsor them. A few hundred dollars a month isn’t charity; it’s risk management.

- Offer security help (threat modeling release workflows, turning on provenance, automating tests).

- Avoid “hot take” culture. Share verified guidance (like the Semgrep rule, npm docs for Trusted Publishing), not rumors.

A step-by-step today/tomorrow plan

Today (90 minutes):

- Freeze installs in CI and prod deploys; switch CI to npm ci.

- Scan your repos with the Semgrep rule and grep your lockfiles for the specific versions listed above.

- If you find a hit, roll back, pin with overrides, rebuild clean, and redeploy.

- If a contaminated web build went live, rotate client-facing secrets and publish a brief status note.

This week:

- Inventory builds created on Sept 8 during the exposure window. Diff bundles for new obfuscation/wallet hooks; validate artifact signatures where possible.

- Rotate CI credentials in affected pipelines; audit for over-privileged tokens.

- Enable Trusted Publishing for anything you own that publishes to npm; add a manual approval step if needed.

- Create a policy to prefer dependencies with provenance attestations and to block unsigned/manual publishes for critical packages where feasible.

This quarter:

- Roll out hardware-key/WebAuthn org-wide for dev accounts.

- Stand up a private registry proxy with block/allow policies and organization-wide overrides during incidents.

- Invest in SBOM + continuous diff so your question becomes “which releases included X?” instead of “did we ever install X?”

- Sponsor the open-source software you depend on and offer release engineering time to move those projects onto Trusted Publishing and provenance.

Final thoughts

Incidents like this are unsettling because they touch the trust fabric of modern software. But the sky isn’t falling. The timeline was short. The community response was fast. And the fixes we need—Trusted Publishing, provenance, reproducible installs, hardware-key 2FA, and least-privilege CI—are available right now.

We’re not going to eliminate phishing or human mistakes. We can design our pipelines so a single phished TOTP can’t push malware to millions, and so consumers can cryptographically verify what they’re installing. That’s how we honor the work of maintainers: by supporting them and engineering safer defaults around them.

Stay kind. Ship safer.

Sources & further reading

- Aikido’s analysis, including timeline, IoCs, the npmjs.help phish, and deobfuscated snippets of the injected code (Sept 8). Aikido

- BleepingComputer’s incident coverage with affected package list and browser-hook details (Sept 8). BleepingComputer

- Semgrep’s advisory with the exact bad versions and a free detection rule. Semgrep

- Nx post-mortem (Aug 26) showing why token-based publishes remain brittle, and why they moved to Trusted Publishing. Nx

- npm docs on Trusted Publishing and provenance (GA), and how consumers can verify. npm Docs